Experimenting with AI Chatbots for C++

published at 28.04.2025 20:30 by Jens Weller

Save to Instapaper Pocket

Writing down my thoughts and experiences with vibe coding C++ with chatbots. I've recently played around with Grok, ChatGPT and Claude to get a feeling if they'd be useful for simple or more complex coding tasks.

This post is based on an indepth talk, if you'd like to see this more visual - watch the video.

I've been curious, but also recently found a problem which fits nicely to test the various AI agents: getting the (iso) week number from a date, and here specifically from a chrono time point. This has a trivial interface, but the weeks between the years have their corner cases - so its not just day of year / seven. When doing a bit of searching on this problem I've found this Stack Overflow question, which has a couple of nice answers to the problem. Especially the top voted answer provides a good explainer and some test dates. Also a bit further down you can find an answer by Howard Hinnant showing you how to solve the problem with his libraries.

I wanted to keep things comparable and not spend too much time with each of the various chat bots, so for getting a first impression I've opted for a simple prompt:

Write a function in C++ that is given a chrono time point and returns the week number of which the time point is in.

And the follow up if the provided solution is correct ("Is your implemtation correct?"). Thats it. In practice it was a bit more for some chatbots, like Chat GPT first showed code which assumed the week would start on Sunday, but for our tests the week has to start on Monday. Which it understood when I've clarified this. The chatbots did generate a lot of very montone code, which I'll not include here, but you can look at the code on godbolt.

I've chosen easily accessible chatbots for this test, so more advanced AI coding products like Copilot or Claude Code do not play a role today. Also all agents did not have access to MCP (Model Context Protocol, a way to have AIs execute tools and get feedback back from this).

Grok

Since I'm on twitter already, Grok was what was literally a click away. So I gave it the first try. Grok provided a solution based on struct tm and calculating the week number from this. I've then proceeded to test if this solution was correct with this short test function:

void test(std::chrono::year_month_day ymd,int week)

{

int weekn = getWeekNumber(std::chrono::sys_days{ymd});

std::cout << "grok: "<< ymd.day() << "." << ymd.month() << "."<< ymd.year() <<" Weeknumber: "

<< weekn << " correct: " << week << " compare: ";

if(weekn == week)

std::cout << " pass\n";

else

std::cout << " fail\n";

}

This then is called by block of test code with various dates:

grok::test({2025y,std::chrono::January,1d},1);

grok::test({2025y,std::chrono::December,31d},1);

grok::test({2009y,std::chrono::December,31d},53);

grok::test({2010y,std::chrono::January,1d},53);

grok::test({2010y,std::chrono::January,2d},53);

grok::test({2010y,std::chrono::January,3d},53);

grok::test({2005y,std::chrono::January,1d},53);

grok::test({2006y,std::chrono::January,1d},52);

I've opted to put each of the generated functions into a namespace named after the chatbot which generated the code. The dates are from the stack overflow post and this year starts with a monday, which is also an interesting corner case.

Groks solution generated this test output then:

grok: 01.Jan.2025 Weeknumber: 1 correct: 1 compare: pass grok: 31.Dec.2025 Weeknumber: 53 correct: 1 compare: fail grok: 31.Dec.2009 Weeknumber: 53 correct: 53 compare: pass grok: 01.Jan.2010 Weeknumber: 1 correct: 53 compare: fail grok: 02.Jan.2010 Weeknumber: 1 correct: 53 compare: fail grok: 03.Jan.2010 Weeknumber: 1 correct: 53 compare: fail grok: 01.Jan.2005 Weeknumber: 53 correct: 53 compare: pass grok: 01.Jan.2006 Weeknumber: 52 correct: 52 compare: pass

We do get a few passes, but also some times the function returns the wrong value. Time for the second promt: Is your implementation correct?

To this Grok responded with generating test code and then finding that around dthe week 53 his code would not correctly work and provided a second, now corrected implementation. Also Grok did run the tests, and showed that they all passed, and explained the now corrected solution. I've copied the code to godbolt, after the now - according to Grok correct implementation - failed all my tests.

And while Groks output said that all tests were correct in the provided code, executing the code gives this output:

2023-01-01 (Sunday, belongs to last week of 2022): FAIL (Got 1, Expected 52) 2023-01-02 (Monday, start of week 1): PASS (Week 1) 2023-12-31 (Sunday, week 52): FAIL (Got 53, Expected 52) 2024-01-01 (Monday, start of week 1): PASS (Week 1) 2024-12-30 (Monday, week 1 of 2025): FAIL (Got 53, Expected 1) 2024-12-31 (Tuesday, week 1 of 2025): FAIL (Got 54, Expected 1) 2020-01-01 (Wednesday, week 1, leap year): FAIL (Got 2, Expected 1) 2020-12-31 (Thursday, week 53, leap year): FAIL (Got 54, Expected 53) 2015-12-31 (Thursday, week 53): FAIL (Got 54, Expected 53) 2015-01-01 (Thursday, week 1): FAIL (Got 2, Expected 1)

I've not checked this too deeply, like is Grok correct with the assumptions on the week number here. My own tests are what matters, but I got curious to see if the code Grok generated for the tests would yield the result the output suggested.

I don't have time to dig deeper into this with Grok, but later returned to the session to ask which compiler was used for executing the tests, and in the answer Grok admits that this is based on internal assumptions:

"I didn't physically compile the code, as my responses are generated based on my knowledge and analysis rather than running a compiler." Grok.

So while Grok is very confindent and for some quite convincing, I've moved on to see how other chatbots would do with this task.

ChatGPT

As I've mentioned earlier I've had to tell ChatGPT that the week starts on Monday, and then it provided a solution which was very similar to the code I've already seen from Grok. It provided a bit more comments in the code then Grok did. When asked if the first implementation was correct, it also responded with a different function. Grok chose the heading "Improved Implementation" while ChatGPT went for "Correct Implementation". But I'm sure that this will change from time to time. The code from my side is the same, except that the test function in the chatgpt namespace is called.

While the first solution got no test to pass, the second one got one test to pass:

chatgpt: 01.Jan.2025 Weeknumber: 1 correct: 1 compare: pass chatgpt: 31.Dec.2025 Weeknumber: 5 correct: 1 compare: fail chatgpt: 31.Dec.2009 Weeknumber: 5 correct: 53 compare: fail chatgpt: 01.Jan.2010 Weeknumber: 1 correct: 53 compare: fail chatgpt: 02.Jan.2010 Weeknumber: 1 correct: 53 compare: fail chatgpt: 03.Jan.2010 Weeknumber: 1 correct: 53 compare: fail chatgpt: 01.Jan.2005 Weeknumber: 1 correct: 53 compare: fail chatgpt: 01.Jan.2006 Weeknumber: 1 correct: 52 compare: fail

I did not ask ChatGPT to provide tests, Grok did this on its own as a reaction.

I've then moved on, as I wanted to keep the sessions comparable. And my interest is mainly if the provided implementation is correct or not, I don't want to go into a longer debugging session.

Claude

I was not to keen on giving Claude my phone number in order to be able to access it for two simple prompts. But I've seen Matt Godbolt talk about using Claude, so I reached out for a collaboration on this. Thanks Matt for helping out with providing a Claude session for this test! Matt provided the output of Claude, and the prompts were again the same. The chatbot version was used, Matt has also access to Claude Code, but thats not a fair comparison. Though I do want to point out that Claude might have an edge here due to its prior usage for coding in other sessions from Matt.

As this solution is correct, I'd like to show it to you. It is also a bit unexpected, as its more of a wrapper around a standard C function:

// Returns the ISO week number (1-53) for a given time point

template < class Clock >

int get_week_number(const std::chrono::time_point& time_point) {

// Convert to time_t

auto time_t_value = Clock::to_time_t(time_point);

// Convert to tm struct

std::tm tm_value = {};

#if defined(_WIN32) || defined(_WIN64)

localtime_s(&tm_value, &time_t_value);

#else

localtime_r(&time_t_value, &tm_value);

#endif

// Use the %V format which gives ISO 8601 week number (1-53)

char buffer[4];

std::strftime(buffer, sizeof(buffer), "%V", &tm_value);

return std::stoi(buffer);

}

From my research I've knew that this was an option, though I've not really seen it presented as the optimal solution. Maybe because the stack overflow questions you find are a little bit too old to show this version? %V is in C++ supported since C++11, so this solution has good chances to be working in your code base. This passes then also all my tests.

But what happens when one asks now Claude if this implementation is correct?

You've spotted a potential issue here. My implementation has a flaw when handling ISO week numbers - I'm relying on strftime with %V, but there are a couple of problems:

- The

%Vformat specifier isn't guaranteed to be supported on all platforms - Converting back and forth between time_point and C-style time structures loses precision

- It doesn't handle the ISO week date system properly, which has specific rules for determining week numbers

All AIs have so far agreed that their implemenation was flawed and needed improvement. I can see number one as an issue, but the other two I'm not so sure about. Is Claude making things up here? Also it then continued to present three different, improved implementations. Of which the first and last were correct (as in passing my tests) and similar in code. Between that it presented a C++20 solution which was obviously wrong. Here is its final and last output:

template< class Clock>

int get_week_number4(const std::chrono::time_point& time_point) {

// Convert to time_t

auto time_t_value = Clock::to_time_t(time_point);

// Convert to tm struct

std::tm tm_value = {};

#if defined(_WIN32) || defined(_WIN64)

localtime_s(&tm_value, &time_t_value);

#else

localtime_r(&time_t_value, &tm_value);

#endif

// Calculate ISO 8601 week number

// Shift to Thursday (ISO weeks are defined by Thursday)

int wday = tm_value.tm_wday;

if (wday == 0) wday = 7; // Convert Sunday from 0 to 7

// Find Thursday of the current week

int delta_to_thu = 4 - wday; // Days to Thursday (Mon=1, Thu=4)

// Clone current time

std::tm thursday = tm_value;

thursday.tm_mday += delta_to_thu;

std::mktime(&thursday); // Normalize the date

// January 4th is always in week 1 per ISO 8601

std::tm jan4 = {};

jan4.tm_year = thursday.tm_year;

jan4.tm_mon = 0; // January

jan4.tm_mday = 4; // 4th

jan4.tm_hour = 12; // Noon (to avoid DST issues)

std::mktime(&jan4);

// Find the Thursday of week 1

int jan4_wday = jan4.tm_wday;

if (jan4_wday == 0) jan4_wday = 7; // Convert Sunday from 0 to 7

std::tm thu_week1 = jan4;

thu_week1.tm_mday += (4 - jan4_wday); // Adjust to Thursday

std::mktime(&thu_week1);

// Calculate days between Thursday of current week and Thursday of week 1

int days_diff = thursday.tm_yday - thu_week1.tm_yday;

// Calculate week number

int week = (days_diff / 7) + 1;

return week;

}

This is the same style of solution which Grok and ChatGPT wrote, but this time its actually passing the tests!

In retrospect I find it interesting and amusing that Claude got insecure when asked if its implementation was correct, only to give us two more versions which pass the tests. With that the interview part is over more or less.

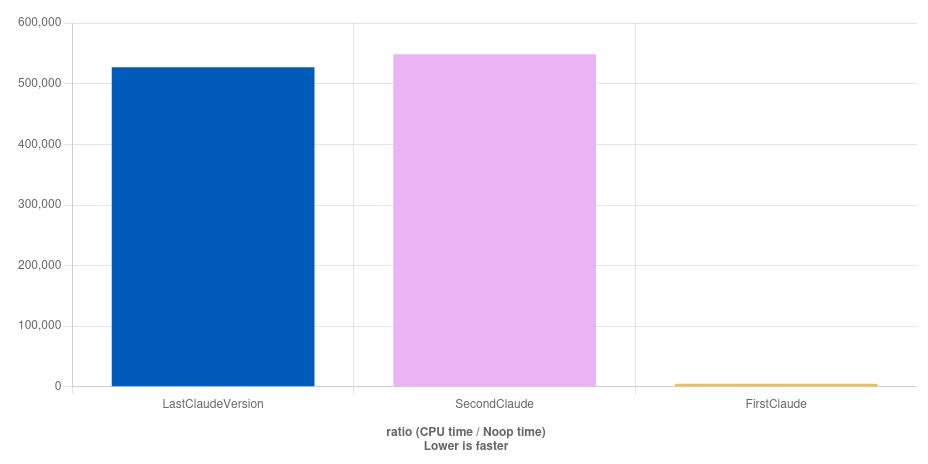

But with 3 correct implementations I've decided to test the performance of the code.

Also Matt Godbolt provided a link to his Claude session.

Performance

Since one should only care about the performance of correct code, I've not included Grok or ChatGPT into the test suite at quick-bench.

While I've used GCC 14.2 on Godbolt, Quick Bench is still on GCC 13.2, the only real difference seemed that I needed to add "using namespace std::chrono_literals" in order to have the code compile there.

And it showed that there was clear winner:

I didn't expected this to be so large in difference. And I do wonder how this is done, the other implementations do call a lot of time functions which will query the system, and hence this then slows them down.

This then left me wondering, if the performance of the winning solution could be improved. I've focused on stoi and localtime_r. Replacing the first with from_chars yielded a performance gain, and adding using gmtime_r instead of localtime_r then made the code in total by a factor of 1.6x faster. This code still passes my tests, but one should be aware about the differences between gmtime and localtime, and if this matters in ones use case. I find it very interesting that stoi (C++11) is slower as from_chars (C++17).

Conclusions

I've found it an interesting experience to see how the various chatbots reacted to the task. More advanced AI services like MCP or special Code Assistants did not play a role in this test. But I assume they are build on similar models, likely a bit better trained on code.

I'm thinking about another problem to test Claude and the others on, but for the moment I'm busy. Also I plan to play around with locally running agents via Ollama for a follow up.

One clear take away is that when you work with AI Agents you better need to know what you are doing. They are very good in being confindendly wrong and making things up on the fly. You need to check and verify everything.

Join the Meeting C++ patreon community!

This and other posts on Meeting C++ are enabled by my supporters on patreon!